Automated Racism

CAN POLICE “PREDICT CRIME”?

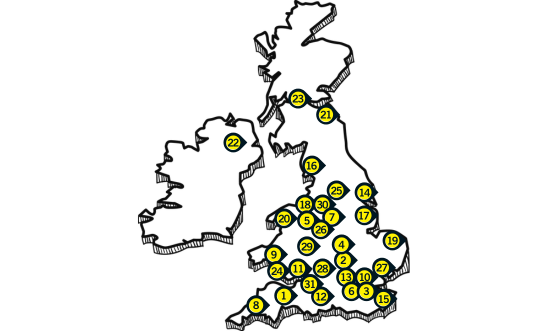

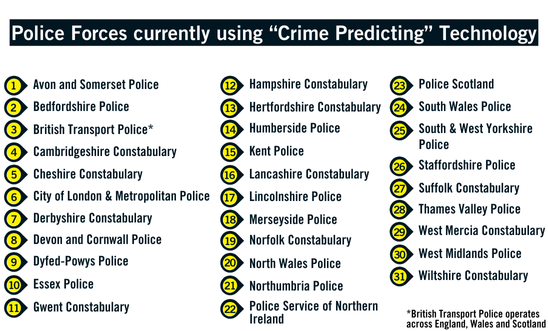

Amnesty International UK’s new report Automated Racism exposes how almost three-quarters of UK police forces are using technology to try to “predict crime”, with little regard for our human rights. Police call this “predictive policing”.

The technology police use marks our neighbours as suspects based purely on:

- the colour of their skin

- where they live

- their socio-economic background.

This is racial profiling. It’s automated racism. But we can stop it.

“We’ve had young people as young as 15 put in handcuffs on the floor, just because they might have been riding a bike… We see this massive, disproportionate use of force, and it is justified by the fact that Grahame Park has been labelled a ‘crime hotspot’.” - Hope Chilokoa-Mullen of The 4Front Project

Help stop Automated Racism

We are calling on the Minister for Policing, Fire and Crime Prevention, Diana Johnson MP, to prohibit automated and ‘predictive’ policing systems in England and Wales.

Where is “predictive policing” technology being used?

- Avon and Somerset Police – Bristol, Bath, Weston-super-Mare, Taunton

- Bedfordshire Police – Luton, Bedford

- British Transport Police – Operates across the UK, covering railway networks in major cities like London, Manchester, Birmingham, Glasgow, etc.

- Cambridgeshire Constabulary – Cambridge, Peterborough

- Cheshire Constabulary – Chester, Warrington, Crewe, Macclesfield

- City of London Police – City of London (distinct from Greater London)

- Derbyshire Constabulary – Derby, Chesterfield

- Devon and Cornwall Police – Exeter, Plymouth, Torquay, Truro

- Dyfed-Powys Police – Carmarthen, Aberystwyth, Newtown

- Essex Police – Chelmsford, Colchester, Southend-on-Sea

- Gwent Constabulary – Newport, Cwmbran, Abergavenny

- Hampshire Constabulary – Southampton, Portsmouth, Winchester

- Hertfordshire Constabulary – St Albans, Watford, Stevenage

- Humberside Police – Hull, Grimsby, Scunthorpe

- Kent Police – Maidstone, Canterbury, Dover

- Lancashire Constabulary – Preston, Blackpool, Blackburn

- Lincolnshire Police – Lincoln, Boston, Grantham

- Merseyside Police – Liverpool, St Helens, Birkenhead

- Metropolitan Police Service – Greater London (excluding City of London)

- Norfolk Constabulary – Norwich, King’s Lynn, Great Yarmouth

- North Wales Police – Wrexham, Bangor, Rhyl

- Northumbria Police – Newcastle upon Tyne, Sunderland, Gateshead

- Police Service of Northern Ireland – Belfast, Londonderry/Derry, Lisburn

- Police Scotland – Glasgow, Edinburgh, Aberdeen, Dundee

- South Wales Police – Cardiff, Swansea, Bridgend

- South Yorkshire Police – Sheffield, Doncaster, Rotherham

- Staffordshire Police – Stoke-on-Trent, Stafford, Burton upon Trent

- Suffolk Constabulary – Ipswich, Bury St Edmunds, Lowestoft

- Thames Valley Police – Oxford, Reading, Milton Keynes

- West Mercia Constabulary – Worcester, Hereford, Shrewsbury

- West Midlands Police – Birmingham, Coventry, Wolverhampton

- West Yorkshire Police – Leeds, Bradford, Wakefield

- Wiltshire Constabulary – Swindon, Salisbury, Trowbridge

What is “crime prediction”? What is “predictive policing”?

“Crime prediction” is when police use automated technologies, built on police data and algorithms, to try to predict who will commit a crime or where crime will happen. It is also called “predictive policing”.

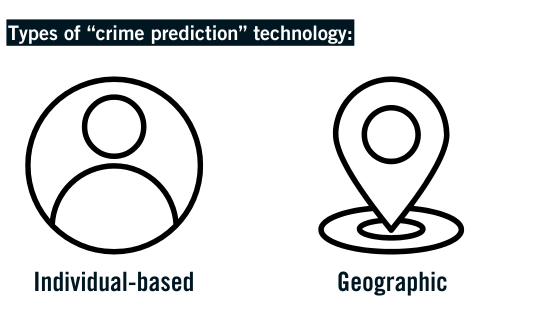

In our report Automated Racism: How police data and algorithms code discrimination into policing, we expose how police are using two types of “crime prediction” system:

- Individual-based, where the police use technology to predict whether a person may commit a particular crime

- Geographical, where police use tools to create “crime hotspot” maps.

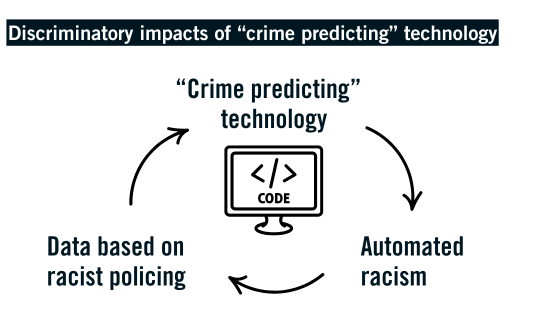

Why is it dangerous?

Policing in the UK is already biased against minoritised communities, with many forces accepting they’re institutionally racist.

These systems are built on police data that reflects this bias, so when forces add data-driven technology, the discrimination becomes automated.

“Rather than 'predictive' policing, it's simply 'predictable' policing [...] it will always drive against those who are already marginalised.” - Dr Patrick Williams

What is the impact of “predictive policing” technology?

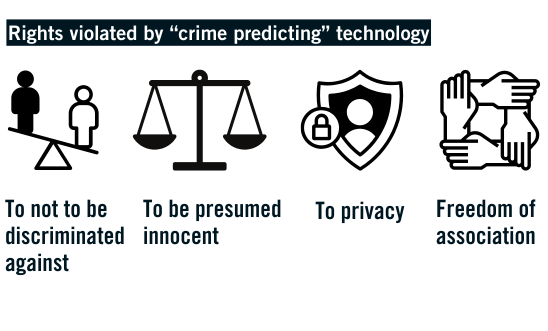

The use of this technology violates a range of rights and breaches the UK’s national and international human rights obligations, including:

- Right not to be discriminated against

  - Police use of these systems results, directly and indirectly, in racial profiling and the disproportionate targeting of Black and racialised people and people from lower socio-economic backgrounds.

- Right to a fair trial and the presumption of innocence

  - It targets people and groups before they have actually offended, which risks infringing the presumption of innocence and the right to a fair trial.

- Right to privacy

  - This is indiscriminate mass surveillance. Mass surveillance can never be a proportionate interference with the rights to privacy, freedom of expression, freedom of association and peaceful assembly.

- Freedom of association

  - People who live in areas targeted by predictive policing will seek to avoid those areas as a result, creating a chilling effect on freedom of association.